Updated git for above change

https://github.com/phenomlab/nodebb-harmony-threading/commit/14a4e277521d83d219065ffb14154fd5f5cfac69

OK.

Let me summarize in detail.

1- Stop nodebb

2- Stop iframely

3- Stop nginx

4- Install redis server : sudo apt install redis-server

5- Change the nodebb config.json file (can you verify this syntax please? The nodebb documentation says “database”: 0 and not “database”: 5, but maybe it’s just a name and I can use the same as mongo, like “database”: nodebb. I also moved the port directive) :

{

"url": "https://XX-XX.net",

"secret": "XXXXXXXXXXXXXXXX",

"database": "mongo",

"port": [4567, 4568,4569],

"mongo": {

"host": "127.0.0.1",

"port": "27017",

"username": "XXXXXXXXXXX",

"password": "XXXXXXXXXXX",

"database": "nodebb",

"uri": ""

},

"redis": {

"host":"127.0.0.1",

"port":"6379",

"database": 5

}

}

6- Change nginx.conf :

# add the below block for nodeBB clustering

upstream io_nodes {

ip_hash;

server 127.0.0.1:4567;

server 127.0.0.1:4568;

server 127.0.0.1:4569;

}

server {

server_name XX-XX.net www.XX-XX.net;

listen XX.XX.XX.X;

listen [XX:XX:XX:XX::];

root /home/XX-XX/nodebb;

index index.php index.htm index.html;

access_log /var/log/virtualmin/XX-XX.net_access_log;

error_log /var/log/virtualmin/XX-XX.net_error_log;

# add the below block which will force all traffic into the cluster when referenced with @nodebb

location @nodebb {

proxy_pass http://io_nodes;

}

location / {

limit_req zone=flood burst=100 nodelay;

limit_conn ddos 10;

proxy_read_timeout 180;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $http_host;

proxy_set_header X-NginX-Proxy true;

# Point proxy_pass at the io_nodes upstream so that the clustering works (proxy_pass needs a URL, not a named location such as @nodebb)

proxy_pass http://io_nodes;

proxy_redirect off;

# Socket.IO Support

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

}

listen XX.XX.XX.XX:XX ssl http2;

listen [XX:XX:XX:XX::]:443 ssl http2;

ssl_certificate /etc/ssl/virtualmin/166195366750642/ssl.cert;

ssl_certificate_key /etc/ssl/virtualmin/166195366750642/ssl.key;

if ($scheme = http) {

rewrite ^/(?!.well-known)(.*) "https://XX-XX.net/$1" break;

}

}

7- Restart redis server : systemctl restart redis-server.service

8- Restart nginx

9- Restart iframely

10- Restart nodebb

11- Test configuration
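One possible way to approach step 11 is to check that every port listed in config.json (step 5) also appears in the io_nodes upstream block (step 6). The sketch below is only an illustration: the function names are made up for this example, and the regular expressions assume the simple `server host:port;` layout shown above, not every form nginx accepts.

```python
import re


def upstream_ports(nginx_conf: str, upstream: str = "io_nodes") -> list[int]:
    """Extract the ports of the `server host:port;` lines inside one upstream block."""
    block = re.search(
        r"upstream\s+" + re.escape(upstream) + r"\s*\{(.*?)\}", nginx_conf, re.S
    )
    if block is None:
        return []
    return [int(p) for p in re.findall(r"server\s+[^\s;:]+:(\d+)", block.group(1))]


def missing_ports(config_ports: list[int], nginx_conf: str) -> list[int]:
    """Ports NodeBB is configured to listen on that the upstream block does not list."""
    listed = set(upstream_ports(nginx_conf))
    return [p for p in config_ports if p not in listed]


if __name__ == "__main__":
    # Hypothetical sample: the upstream block is missing the third port on purpose.
    sample_conf = """
    upstream io_nodes {
        ip_hash;
        server 127.0.0.1:4567;
        server 127.0.0.1:4568;
    }
    """
    print(missing_ports([4567, 4568, 4569], sample_conf))
```

An empty list would suggest the two files agree on the ports; it says nothing about whether the NodeBB processes are actually running.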

@DownPW said in NODEBB: Nginx error performance & High CPU:

5- Change the nodebb config.json file (can you verify this syntax please? The nodebb documentation says “database”: 0 and not “database”: 5,

All fine from my perspective - no real need to stop iFramely, but ok. The database number doesn’t matter as long as it’s not being used. You can use 0 if you wish - it’s in use on my side, hence 5.
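To illustrate the point about the database number: Redis databases are numbered indexes rather than names, so a value like nodebb would not work there. Below is a minimal sketch of a sanity check for the "redis" block, assuming redis.conf still has the default `databases 16` (indexes 0 to 15). The function name is hypothetical and this is not part of NodeBB.

```python
import json

# Assumption: redis.conf still uses the default "databases 16".
DEFAULT_REDIS_DATABASES = 16


def check_redis_block(config: dict) -> list[str]:
    """Return a list of problems found in the "redis" block (empty list means OK)."""
    redis_cfg = config.get("redis")
    if redis_cfg is None:
        return ['no "redis" block found']
    problems = []
    db = redis_cfg.get("database")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(db, int) or isinstance(db, bool):
        problems.append(f'"database" should be an integer index, got {db!r}')
    elif not 0 <= db < DEFAULT_REDIS_DATABASES:
        problems.append(f'"database" {db} is outside 0..{DEFAULT_REDIS_DATABASES - 1}')
    return problems


if __name__ == "__main__":
    sample = json.loads(
        '{"redis": {"host": "127.0.0.1", "port": "6379", "database": 5}}'
    )
    print(check_redis_block(sample))
```

This only checks the shape of the value. Whether a given index is already in use by something else still has to be checked on the Redis server itself.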

@DownPW I’d add another set of steps here. When you move the sessions away from mongo to redis, you are going to encounter issues logging in, as the session tables will no longer match, meaning none of your users will be able to log in.

To resolve this issue:

Review https://sudonix.com/topic/249/invalid-csrf-on-dev-install and implement all steps. Note that you will also need the below string when connecting to mongodb:

mongo -u admin -p <password> --authenticationDatabase=admin

Obviously, substitute <password> with the actual value. So in summary:

In the mongodb console, run:

use sudonix;

db.objects.update({_key: "config"}, {$set: {cookieDomain: ""}});

Then type quit() into the mongodb shell.

Hmm, OK. When should I perform these steps?

@DownPW After you’ve setup the cluster and restarted NodeBB

@phenomlab said in NODEBB: Nginx error performance & High CPU:

@crazycells said in NODEBB: Nginx error performance & High CPU:

4567, 4568, and 4569… Is your NodeBB set up this way?

It’s not (I set their server up). Sudonix is not configured this way either, but from memory, this also requires redis to handle the session data. I may configure this site to do exactly that.

Yes, you might be right about the necessity. We have redis installed.

@DownPW yes, this is the place to start:

@DownPW since you pointed it out, I just remembered: since we know when this crowd will come online on our forum, on that particular day we switch off iframely and all preview plugins. That also helps pages open faster.

@phenomlab a general but related question. Since opening three ports helps, is it possible to increase this number? For example, can we run NodeBB on 5 ports at the same time to smooth the web page experience, or is “3” the Goldilocks number for maximum efficiency?

@crazycells It’s not necessarily the “Goldilocks” standard - it really depends on the system resources you have available. You could easily extend it as long as you allow for the additional port(s) in the nginx.conf file also.

Personally, I don’t see the need for more than 3 though.
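As a rough illustration of extending the cluster, the sketch below generates an upstream block for any list of ports, so the nginx side can be kept in step with the "port" array in config.json. The helper names are invented for this example, and the host and upstream name simply mirror the values used earlier in the thread.

```python
def port_range(first: int, count: int) -> list[int]:
    """Consecutive ports starting at `first`, e.g. 4567 and 5 gives 4567..4571."""
    return list(range(first, first + count))


def upstream_block(ports: list[int], host: str = "127.0.0.1", name: str = "io_nodes") -> str:
    """Render an nginx upstream block with ip_hash and one server line per port."""
    lines = [f"upstream {name} {{", "    ip_hash;"]
    lines += [f"    server {host}:{port};" for port in ports]
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Five NodeBB processes instead of three.
    print(upstream_block(port_range(4567, 5)))
```

The same list of ports would also need to go into the "port" array in config.json, and each extra process uses additional system resources.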

Ok redis is ok now. Thanks for your help

I would like to know how to obtain the connecting clients’ real IP in the Nginx log.

I read that I need ngx_http_realip_module for nginx, which is not active by default, but I don’t know if virtualmin has this module enabled.

@DownPW said in NODEBB: Nginx error performance & High CPU:

Ok redis is ok now. Thanks for your help

I would like to know how to obtain the connecting clients’ real IP in the Nginx log.

I read that I need ngx_http_realip_module for nginx, which is not active by default, but I don’t know if virtualmin has this module enabled.

EDIT: OK, it is enabled by default on virtualmin :

nginx -V

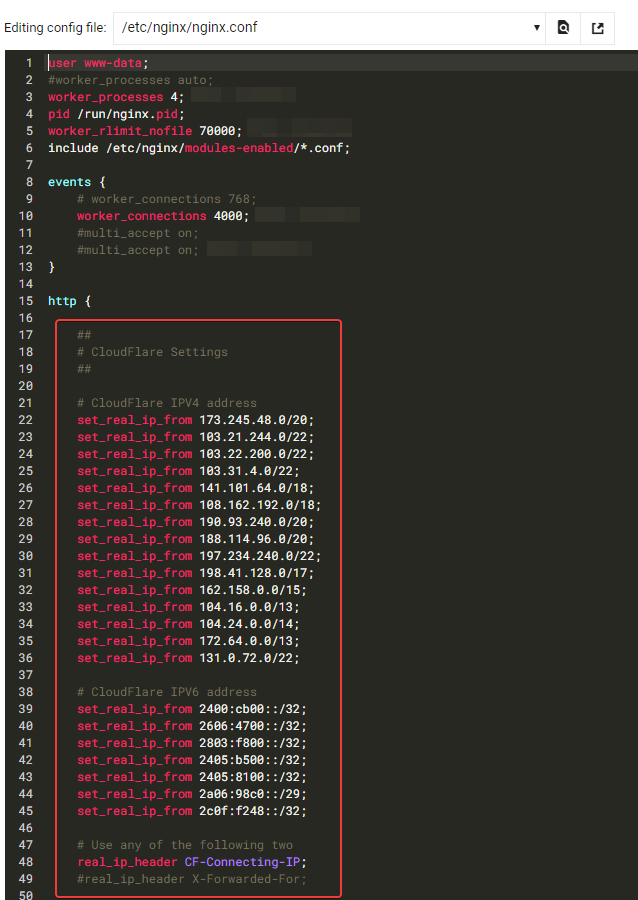

I have activated ngx_http_realip_module in the http block of /etc/nginx/nginx.conf like this :

Does it look good to you?

@DownPW yes, that looks fine.

@DownPW I suspect that’s a failure of the socket server to talk to redis, but the NodeBB Devs would need to confirm.

It seems to be better with some scaling fixes for redis in redis.conf. I haven’t seen the message since the changes I made :

# Increased to the value of /proc/sys/net/core/somaxconn

tcp-backlog 4096

# I'm commenting these out because they can slow down Redis. They are uncommented by default!

#save 900 1

#save 300 10

#save 60 10000
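On the tcp-backlog setting: on Linux the kernel caps a listen backlog at the value of /proc/sys/net/core/somaxconn, so a larger tcp-backlog in redis.conf only helps if somaxconn is at least as high. The sketch below shows that relationship. The function names are hypothetical, and 4096 is simply the value from the config above.

```python
from pathlib import Path

# Linux only; this file does not exist on other systems.
SOMAXCONN_PATH = Path("/proc/sys/net/core/somaxconn")


def effective_backlog(requested: int, somaxconn: int) -> int:
    """The kernel caps the backlog at somaxconn, so the smaller value wins."""
    return min(requested, somaxconn)


def read_somaxconn(path: Path = SOMAXCONN_PATH) -> int:
    """Read the current kernel limit as an integer."""
    return int(path.read_text().strip())


if __name__ == "__main__":
    requested = 4096  # tcp-backlog from redis.conf above
    if SOMAXCONN_PATH.exists():
        limit = read_somaxconn()
        print(f"requested={requested} somaxconn={limit} "
              f"effective={effective_backlog(requested, limit)}")
    else:
        print("somaxconn not available on this system")
```

If the effective value comes out lower than the requested one, raising somaxconn would be the next thing to look at.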

If you have other Redis optimizations, I’ll take all your advice.