@DownPW any update?

Cloudflare bot fight mode and Google search

-

Ever since I moved the prior domain here to .Org, I’ve experienced issues with Google Search Console in the sense that the entire website is no longer crawled.

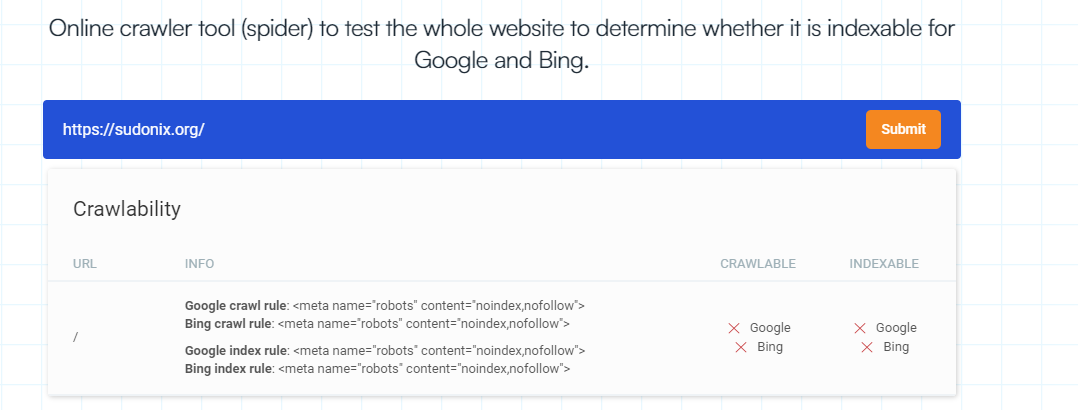

After some intensive investigation, it would appear that Cloudflare’s Bot Fight Mode is the cause. Essentially, this tool works at a JS level and blocks anything it considers to be suspicious - including Google’s crawler. Cloudflare will tell you that you can exclude the crawler, meaning it is permitted to access the site. However, they omit one critical element - you need to have a paid plan to do so.

With Bot Fight Mode enabled, the website cannot be crawled

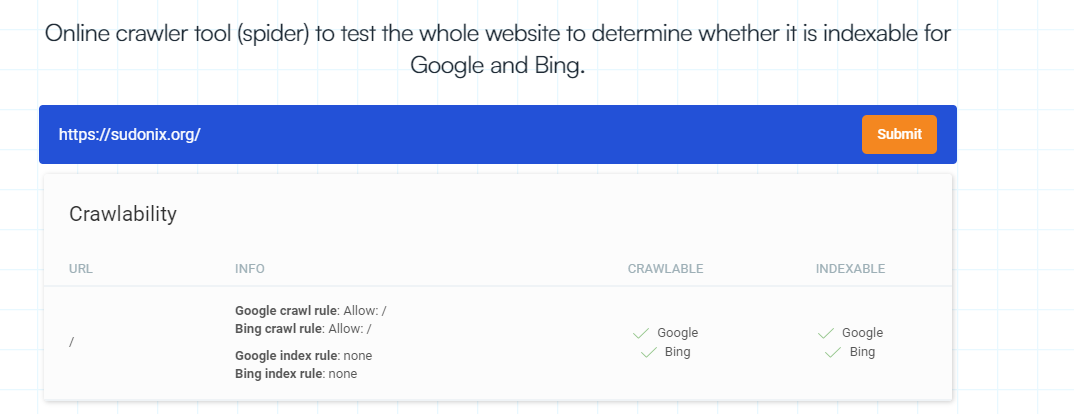

And with it disabled

This also appears to be a well known issue

https://community.cloudflare.com/t/bot-fight-mode-blocking-googlebot-bingbot/333980/6

However, it looks like Cloudflare simply “responded” by allowing you to edit the BFM ruleset in a paid plan, but if you are using the free mode, the only solution you have is to disable it completely. I’ve done this, and yet, my site still doesn’t index!
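For anyone wanting to check their own site, here is a rough sketch of a probe that requests a page with a crawler-style User-Agent and flags responses that look like a Cloudflare challenge. The `cf-mitigated: challenge` header is what Cloudflare sets on challenge pages; the fallback on `403`/`503` with `server: cloudflare` is only my guess at a heuristic and can also match an origin error relayed by Cloudflare. The function names and the `CRAWLER_UA` string are illustrative, and spoofing the User-Agent is not the same as being the real Googlebot, so treat the result as a hint rather than proof.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen

# Assumption: a Googlebot-style User-Agent, used only to imitate a crawler.
CRAWLER_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def looks_like_cloudflare_challenge(status, headers):
    """Heuristic: True if a response looks like a Cloudflare challenge or block."""
    h = {k.lower(): v.lower() for k, v in headers.items()}
    if h.get("cf-mitigated") == "challenge":
        return True
    # Fallback guess: an error status answered by Cloudflare's edge.
    return status in (403, 503) and h.get("server") == "cloudflare"


def probe(url, user_agent=CRAWLER_UA, timeout=10):
    """Fetch url once and return (status, challenged)."""
    req = Request(url, headers={"User-Agent": user_agent})
    try:
        with urlopen(req, timeout=timeout) as resp:
            status, headers = resp.status, dict(resp.headers.items())
    except HTTPError as err:  # 4xx/5xx responses still carry headers
        status, headers = err.code, dict(err.headers.items())
    return status, looks_like_cloudflare_challenge(status, headers)
```

Calling `probe("https://example.org/")` (a placeholder URL) with Bot Fight Mode on and then off should give a quick before/after comparison without waiting on Search Console.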

I’ve completely disabled Cloudflare (DNS only) for now to see if the situation improves.

-

Edit - I’ve re-enabled CF on this domain, but left BFM disabled

-

Coming back here with an update. I’ve actually disabled CF on this domain. It’s been off for a couple of weeks, and honestly, there’s zero difference.

It’s going to remain that way I think…

-

I’m not a systems guy at all, so in basic terms why is Cloudflare used?

Yesterday someone had problem with their server and I took a look at it but was out of my depth with it. Some nginx issues, which I wasn’t sure how to fix

In simple terms if you buy a domain, and have your own server, can you point one to another without using Cloudflare, or Nginx?

-

@Panda said in Cloudflare bot fight mode and Google search:

I’m not a systems guy at all, so in basic terms why is Cloudflare used?

Cloudflare is used to act as a gateway or a proxy to your website. This has the immediate benefit of hiding your true IP address, making DDoS attacks much harder. Cloudflare isn’t a CDN (Content Delivery Network) in the traditional sense, although most people seem to think it is; for example, it doesn’t cache HTML content by default.

Cloudflare offers numerous performance and security enhancements along with Layer 7 DDoS protection. Essentially, you can think of it as a security gateway where all traffic is examined for threats before being allowed through.

However, in the same vein, cyber criminals also hide behind Cloudflare to make their domain names look legitimate.

@Panda said in Cloudflare bot fight mode and Google search:

In simple terms if you buy a domain, and have your own server, can you point one to another without using Cloudflare, or Nginx?

Yes, of course. You simply use DNS to create the associated records (an A record for the domain, a CNAME for www, etc.) that point to the IP address hosting the content. Most people “think” they need Cloudflare, when actually, they don’t. The free package has limitations, such as the number of page rules you can create, and some features are only available from the Pro plan upwards (USD 20.00 per month - and it’s licensed per domain, so you can’t just buy Pro and use all of your domains in it).
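To make that concrete, here is a minimal sketch of the two records involved, written out in the usual zone-file style, plus a check that a name resolves to your server once the records have propagated. The domain `example.org`, the address `203.0.113.10` (a documentation-only IP) and both function names are placeholders of mine; your registrar or DNS host will normally give you a form to enter the same values instead of a zone file.

```python
import socket


def zone_records(domain, ipv4, ttl=3600):
    """Return zone-file style lines pointing domain and www.domain at ipv4."""
    fqdn = domain.rstrip(".") + "."
    return [
        f"{fqdn} {ttl} IN A {ipv4}",            # bare domain -> server IP
        f"www.{fqdn} {ttl} IN CNAME {fqdn}",    # www -> alias of the bare domain
    ]


def resolves_to(hostname, expected_ipv4):
    """True if hostname currently resolves to expected_ipv4 (needs network access)."""
    _name, _aliases, addresses = socket.gethostbyname_ex(hostname)
    return expected_ipv4 in addresses


for line in zone_records("example.org", "203.0.113.10"):
    print(line)
```

No proxy and no Cloudflare are involved here; the web server on that IP (NGINX or anything else) simply answers for the domain.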

As a final point, Cloudflare actually slows down traffic by adding extra checks and headers, which ultimately increases TTFB (Time to First Byte). The idea that it speeds a site up is basically a myth, but one everyone seems to have bought into. You can get the same level of protection with open source tooling and 80% best practice implementation if you want to invest the time.
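If you want to measure this yourself rather than take my word for it, below is a small sketch that times how long a server takes to start answering a request. It connects first and only then starts the clock, so DNS lookup and TCP/TLS setup are excluded and the figure is just the wait between sending the request and receiving the response headers. The function name, the `ttfb-check` User-Agent and the defaults are my own choices, and a single sample is noisy, so run it several times before drawing conclusions.

```python
import http.client
import time


def measure_ttfb(host, path="/", port=443, use_tls=True, timeout=10):
    """Return seconds from sending a GET until the status line and headers arrive."""
    conn_cls = http.client.HTTPSConnection if use_tls else http.client.HTTPConnection
    conn = conn_cls(host, port, timeout=timeout)
    try:
        conn.connect()  # connect first so connection setup is not timed
        start = time.perf_counter()
        conn.request("GET", path, headers={"User-Agent": "ttfb-check"})
        resp = conn.getresponse()  # returns once the response headers are read
        elapsed = time.perf_counter() - start
        resp.read()  # drain the body so the connection closes cleanly
        return elapsed
    finally:
        conn.close()
```

Running `measure_ttfb("example.org")` (placeholder host) with the domain proxied through Cloudflare and again in DNS-only mode gives a like-for-like comparison from the same machine.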

-

and what about nginx?

Might be me being dumb, but the linux file structure is confusing and I never know where to go to edit this nginx stuff, or what its doing.

-

@Panda said in Cloudflare bot fight mode and Google search:

and what about nginx?

NGINX is a web server application in its own right (born out of the C10k problem, where Apache suffers locks at around 10,000 concurrent sessions) which has a low footprint, yet is scalable no matter what you throw at it.

@Panda said in Cloudflare bot fight mode and Google search:

Might be me being dumb, but the linux file structure is confusing and I never know where to go to edit this nginx stuff, or what its doing.

Linux uses the EXT file system (whereas Windows uses NTFS), and has its origins in UNIX. This means that Linux would use a path like `/var/log/` whereas Windows will use `c:\windows\system32` for example. The Linux file system isn’t confusing, but can appear daunting to the uninitiated.

NGINX under Linux is normally located in `/etc/nginx` but this is dependent on the Linux distribution (for example, under Ubuntu this would apply, but this is not always the case with Debian or Red Hat).

-

It is recommended to use nginx with docker OpenResty, the isolation of docker makes the environment very clean! I use the 1Panel panel to manage these, and the visual management makes it so much easier for me!

-

@veronikya said in Cloudflare bot fight mode and Google search:

It is recommended to use nginx with docker OpenResty, the isolation of docker makes the environment very clean!

That’s a good question. The advantage of docker is the ability to isolate, but in my view, it’s clunky, and not necessary - particularly when you need two applications to talk to each other, or you want to add a website to an existing NGINX host for example - it’s not possible to add a docker instance to an existing server and this is what makes it unappealing for me.

Yes, the environment is “clean”, but not practical in most cases.

-

@phenomlab docker modifications are a pain in the ass, one wrong operation can result in total data loss, I made that mistake once and now I stick to manual deployment for important services

-


@phenomlab We’re in the same boat, brother, which is why I chose to return to my hosting NS after experiencing issues with even those tiny search engine bots. The issue depends on whether you want your website to resemble a corporate firm with few pages and a money-handling function; in that case, you can choose strong security, but for a community, it’s the opposite. But congrats on your site’s good ranking!

-

@veronikya said in Cloudflare bot fight mode and Google search:

docker modifications are a pain in the ass,

I couldn’t have put that better myself - such an accurate analogy. I too have “been there” with this pain factor, and I swore I’d never do it again.
