@Hari Really? Can you elaborate a bit more here?

Adjusting HSTS settings for public Wi-Fi

-

Hello there! Yesterday, I visited IKEA and connected to the public Wi-Fi there. Google and other websites worked as expected, but my own website wouldn’t load. Instead, the browser showed an HSTS error, and I had to switch to mobile data to reach my site.

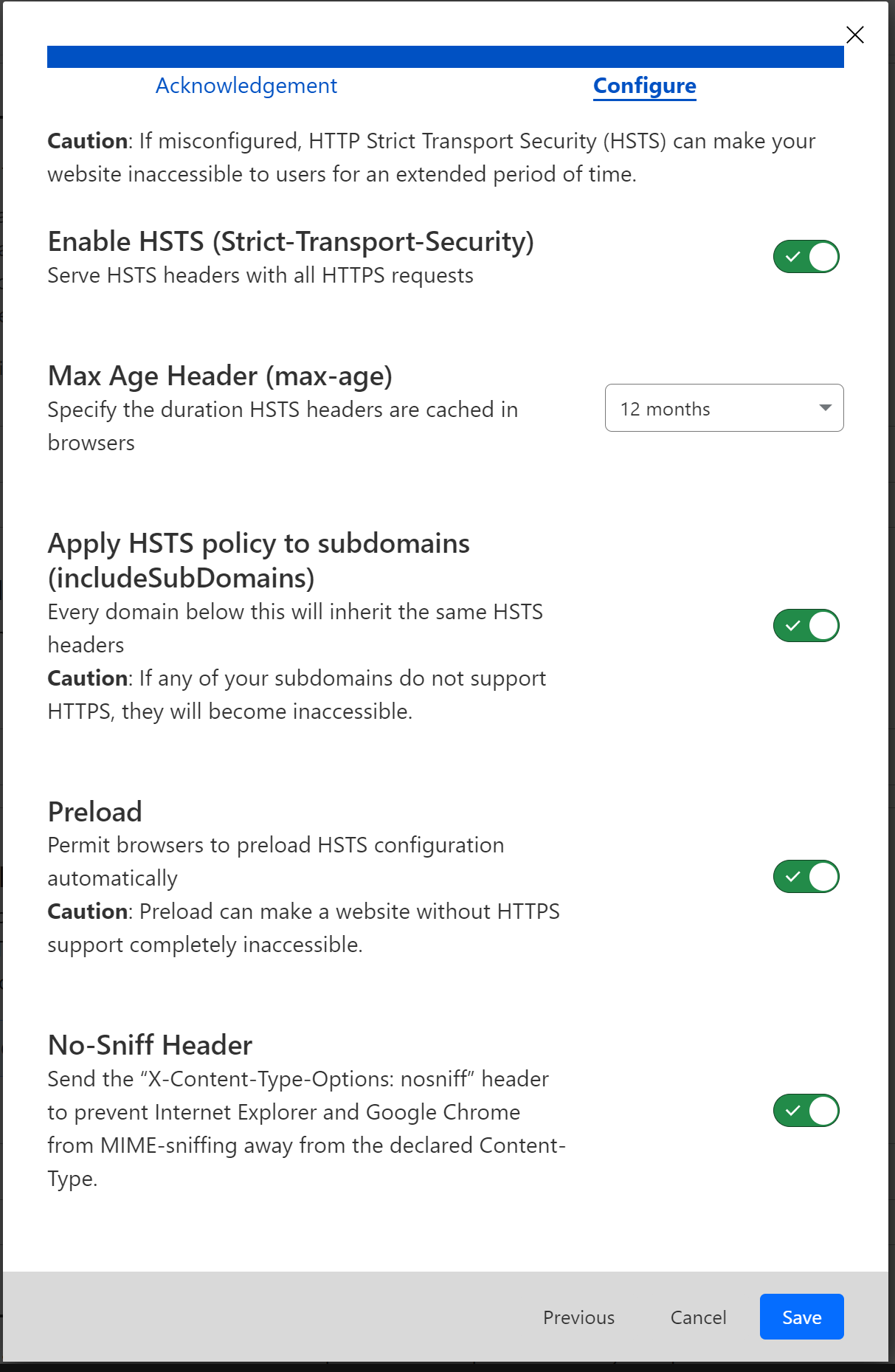

I understand we can’t simply turn off HSTS, since we have already told browsers that we will use HSTS for the next 12 months.

I’ve been using these Cloudflare settings for the past two years. If any adjustments are needed to make sure my site works on public Wi-Fi, please let me know.
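For context on the 12-month point: a browser that receives a `Strict-Transport-Security` header caches the policy for `max-age` seconds, and every new response carrying the header restarts that timer. Removing the header today therefore does not clear the policy from browsers that already cached it; RFC 6797 defines `max-age=0` as the way to tell a returning browser to forget it. The sketch below is a minimal Python illustration of that arithmetic, not Cloudflare's implementation. It assumes a header of `max-age=31536000; includeSubDomains; preload` (365 days), because the actual values behind the Cloudflare settings aren't quoted in the thread, and the function names `parse_hsts` and `hsts_expiry` are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def parse_hsts(header: str) -> dict:
    """Parse a Strict-Transport-Security header value into its directives.

    Directive names are case-insensitive and separated by ';' (RFC 6797, section 6.1).
    'preload' is not part of the RFC; it is a de facto directive used by browser preload lists.
    """
    policy = {"max_age": None, "include_subdomains": False, "preload": False}
    for directive in header.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()
        if name == "max-age":
            # max-age may be sent quoted or unquoted, e.g. max-age="31536000"
            policy["max_age"] = int(value.strip().strip('"'))
        elif name == "includesubdomains":
            policy["include_subdomains"] = True
        elif name == "preload":
            policy["preload"] = True
    return policy


def hsts_expiry(header: str, received_at: datetime) -> datetime:
    """Return when a browser that last saw this header at received_at stops enforcing HSTS.

    Assumes the browser never sees the header again; any later response with the
    header would push the expiry forward.
    """
    max_age = parse_hsts(header)["max_age"]
    if max_age is None:
        raise ValueError("max-age directive is required")
    return received_at + timedelta(seconds=max_age)


if __name__ == "__main__":
    # Hypothetical example: the header was last seen at midnight UTC on 1 January 2023.
    seen = datetime(2023, 1, 1, tzinfo=timezone.utc)
    print(hsts_expiry("max-age=31536000; includeSubDomains; preload", seen))
    # prints 2024-01-01 00:00:00+00:00
```

Under this assumption, a visitor who last loaded the site on 1 January 2023 would keep enforcing HTTPS-only access until 1 January 2024, whatever the server sends in the meantime. That is why loosening the setting wouldn't help visitors who already have the policy cached.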

-

@Hari You already have the correct settings here. This is more likely an issue with the Wi-Fi configuration at IKEA than with CF or your own site. You can test this from another public Wi-Fi access point to prove or disprove the theory.

I would certainly not make any changes without first validating this as described above. If the problem also occurs on a completely different connection, then fair enough, it needs review.

-

@phenomlab Thanks for the reply. I will test this again the next time I visit IKEA, and also on the railway station Wi-Fi. For now, let’s mark this discussion as solved.

-

Hari has marked this topic as solved on

-

@Hari OK, no problem. Keep me posted…
